Release #4: Terminology, Frameworks and Standards - Part 2

Incident Response Process

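ISO 27035 defines a high-level incident response process, but personally I prefer NIST SP 800-61 "Computer Security Incident Handling Guide". In Revision 2, its process flow consists of four phases: Preparation; Detection & Analysis; Containment, Eradication & Recovery; and Post-Incident Activity.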

(Source: NIST CSRC)
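If your incident response tool lets you model the process, the flow can be encoded as allowed phase transitions. The following Python sketch assumes the four phases of NIST SP 800-61 Rev. 2, with the feedback loops from containment back to analysis and from post-incident activity back to preparation. The `Incident` class and ticket ID are hypothetical, and starting every incident in Preparation is a simplification.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Tuple


class Phase(Enum):
    """Incident response phases as named in NIST SP 800-61 Rev. 2."""
    PREPARATION = "Preparation"
    DETECTION_ANALYSIS = "Detection & Analysis"
    CONTAINMENT_ERADICATION_RECOVERY = "Containment, Eradication & Recovery"
    POST_INCIDENT = "Post-Incident Activity"


# Allowed transitions: the forward flow plus the two feedback loops
# (back to analysis during containment, back to preparation after lessons learned).
TRANSITIONS = {
    Phase.PREPARATION: {Phase.DETECTION_ANALYSIS},
    Phase.DETECTION_ANALYSIS: {Phase.CONTAINMENT_ERADICATION_RECOVERY},
    Phase.CONTAINMENT_ERADICATION_RECOVERY: {Phase.DETECTION_ANALYSIS, Phase.POST_INCIDENT},
    Phase.POST_INCIDENT: {Phase.PREPARATION},
}


@dataclass
class Incident:
    ident: str  # hypothetical ticket ID
    phase: Phase = Phase.PREPARATION
    history: List[Tuple[Phase, Phase]] = field(default_factory=list)

    def move_to(self, new_phase: Phase) -> None:
        """Change phase, rejecting transitions the process flow does not allow."""
        if new_phase not in TRANSITIONS[self.phase]:
            raise ValueError(f"{self.phase.value} -> {new_phase.value} is not allowed")
        self.history.append((self.phase, new_phase))
        self.phase = new_phase


if __name__ == "__main__":
    inc = Incident("INC-0001")
    inc.move_to(Phase.DETECTION_ANALYSIS)
    inc.move_to(Phase.CONTAINMENT_ERADICATION_RECOVERY)
    inc.move_to(Phase.DETECTION_ANALYSIS)  # new findings while containing
    print(inc.phase.value)
```

Rejecting invalid transitions makes skipped phases visible, for example closing an incident without ever documenting the analysis.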

Whatever process you use, and this is not limited to incident response, it should always match your current environment (IT governance). For example, if ISO standards are heavily used in your organisation, use them too. If there is no such requirement in your company, look at what your tools support out of the box.

Sometimes it is okay to mix frameworks. For example, an ISO 27001 certification does not require you to use ISO 27035; you are free to use another well-known process that fulfills the requirements.

| Info Box | Creating hardened Systems |
|---|---|
| ! | Implementing preventive measures to harden an IT system is complex and should be embedded in the existing corporate governance processes, such as the Information Security Management (ISM) itself. For each system type (server, desktop, ATM, etc.) and operating system, it is advisable to maintain a corresponding so-called "gold image", "base image", or profile (or whatever your provisioning system calls it). The base image should be customized so that privileged users, services, and tools are removed as far as possible and the default configuration is hardened as far as possible. For this purpose, you can follow guidelines such as the "CIS Benchmarks" (https://www.cisecurity.org/cis-benchmarks/ ) and/or the STIGs (Security Technical Implementation Guides) of DISA (Defense Information Systems Agency, a Combat Support Agency of the US Department of Defense (DoD)), and have specialists carry out further hardening. Additional packages (e.g. server image + web server package), each with its own hardened setup, can be added to this base image. Later, during the runtime of the system, administrators should be granted temporary rights only for the duration of the administrative activity, with formal approval by their superior or by the system manager. A protocol (with timestamps, duration of access, and a description of the tasks and purpose) must be kept for this. This protocol can help during the investigation of a security incident. Selecting "reasons for resolving" when closing incidents, similar to the resolutions for software bugs in Bugzilla (https://www.bugzilla.org/about/), is a useful feature to better understand problem areas in your environment, create better statistics, and get a quick overview of what happened. Deviations from this hardened setup can be monitored by a so-called compliance scanner. Different compliance rules should be created for each system type (see above: the base image plus its add-ons, or the different configurations of provisioning tools such as Ansible, Puppet, Kubernetes, ...). |
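To illustrate the idea of layered compliance rules (base image plus add-ons), here is a minimal Python sketch of what a compliance scanner does at its core: build the expected baseline for a system type and report every setting that deviates. The rule names, the `component.setting` key scheme, and the scanned values are hypothetical; real rules would come from the CIS Benchmarks or DISA STIGs, and real scanners collect the actual values through agents or remote checks.

```python
from typing import Dict, Iterable, Optional, Tuple

# Hypothetical rule sets: "component.setting" -> required value.
BASE_IMAGES: Dict[str, Dict[str, str]] = {
    "server": {
        "sshd.PermitRootLogin": "no",
        "sshd.PasswordAuthentication": "no",
        "service.telnet": "disabled",
    },
}
ADD_ONS: Dict[str, Dict[str, str]] = {
    "webserver": {"apache.ServerTokens": "Prod", "apache.TraceEnable": "off"},
}


def build_baseline(image: str, add_ons: Iterable[str]) -> Dict[str, str]:
    """Combine the base image rules with the rules of every installed add-on."""
    baseline = dict(BASE_IMAGES[image])
    for name in add_ons:
        baseline.update(ADD_ONS[name])
    return baseline


def find_deviations(
    actual: Dict[str, str], baseline: Dict[str, str]
) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return setting -> (expected, found); found is None if the setting is missing."""
    return {
        key: (expected, actual.get(key))
        for key, expected in baseline.items()
        if actual.get(key) != expected
    }


if __name__ == "__main__":
    baseline = build_baseline("server", ["webserver"])
    scanned = {  # values a (hypothetical) agent reported from the host
        "sshd.PermitRootLogin": "yes",
        "sshd.PasswordAuthentication": "no",
        "service.telnet": "disabled",
        "apache.ServerTokens": "Prod",
    }
    for setting, (expected, found) in find_deviations(scanned, baseline).items():
        print(f"{setting}: expected {expected!r}, found {found!r}")
```

Keeping the add-on rules separate from the base image means a new system type is just a new combination, not a new rule set.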

Diamond Model

The "Diamond Model" helps to describe an attack by not limiting the analyst to the hard facts, such as the TTPs, but by also considering motivation and context.

Each security event is a "diamond" and consists of four "corners": Adversary, Infrastructure, Capability, and Victim.

There are two orthogonal axes.

The Diamond Model juxtaposes the attacker (Adversary) and the victim (Victim) on a social-political axis. There is often (but not always) a relationship between victim and attacker: sometimes it is stronger (government hackers who want to steal trade secrets) and sometimes weaker (mass scans for vulnerable software, e.g. NetScaler or MS Exchange). This assumption (or speculation) gives an indication of the attacker's intention.

The other axis, the technological axis, connects the attacker's infrastructure with the attacker's capabilities. These are the attacker's tactics, techniques and procedures (TTPs), such as a distributed denial of service (DDoS) attack via a rented botnet, social engineering (or PSYOPs, psychological operations), or malware that connects to a command-and-control server.

Of course, it is more effective to think about these relationships and intentions in advance so that preventive action can be taken: for example, blocking known IPs used by malware, or, for an energy producer, not unnecessarily disclosing the number of customers, the names of suppliers and partners, or research projects, which could attract the interest of criminals.

A CDC analyst can use the model to structure their analysis and make it complete. The model helps to ask the questions "How?" and "Why?" and so broadens the view beyond the limited scope of a single security event.

Here is an example analysis.

The CDC analyst, the Intrusion Detection System (IDS), or the AV/EDR software (1) detects malware that entered the victim's system via a job offer received from a LinkedIn recruiter contact. The malware was able to install itself and (2) establish a connection to the command-and-control (C2) network. The analyst determines the URL and (3) the associated infrastructure, e.g. the IP address, and (4) manages to identify the owner of the IP via a lookup (whois, Google, etc.).

Now it remains to be clarified what the motivation of the attack might be and which further TTPs the attacker, or the group, uses, in order to find further signs of a compromise (Indicators of Compromise, IoCs). Checking the MITRE ATT&CK database and threat intelligence sources might give a hint.
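To make the four corners and the two axes tangible, here is a minimal Python sketch that records the example above as a diamond event. The class, its method names, and all values (host name, domain, and an IP from a documentation range) are hypothetical. Note that the owner found via whois is usually a hosting provider, so the adversary corner stays open, which is exactly the question the next analysis step has to answer.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class DiamondEvent:
    """One security event as a 'diamond' with its four corners."""
    adversary: Optional[str] = None
    capability: Optional[str] = None
    infrastructure: List[str] = field(default_factory=list)
    victim: Optional[str] = None
    notes: List[str] = field(default_factory=list)

    def social_political_axis(self) -> Tuple[Optional[str], Optional[str]]:
        return (self.adversary, self.victim)

    def technological_axis(self) -> Tuple[List[str], Optional[str]]:
        return (self.infrastructure, self.capability)

    def open_corners(self) -> List[str]:
        """Corners that are still unknown and drive the next analysis questions."""
        corners = {
            "adversary": self.adversary,
            "capability": self.capability,
            "infrastructure": self.infrastructure,
            "victim": self.victim,
        }
        return [name for name, value in corners.items() if not value]


# Walking through the example; all values are placeholders.
event = DiamondEvent(victim="employee workstation WS-042")
event.capability = "malware delivered as a fake job offer via LinkedIn"  # (1)
event.notes.append("malware beacons to its C2 network")                    # (2)
event.infrastructure += ["c2.example.net", "203.0.113.10"]                # (3)
event.notes.append("whois: IP belongs to a hosting provider")              # (4)
print(event.open_corners())  # ['adversary']
```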

Pyramid of Pain

The tactics, techniques and procedures (TTPs) of attackers and the corresponding Indicators of Compromise (IoCs) can be used to detect a successful attack. IoCs are, for example, paths and file names used by the malware, hashes of malicious files, or artifacts found in the RAM of the computer system, but sometimes an IoC is also simply the URL of a C2 server that appears in the log files of a web proxy.

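IoCs fall into different categories of different quality. In the Pyramid of Pain, they range from hash values at the bottom through IP addresses, domain names, network/host artifacts, and tools up to TTPs at the top. The higher the level, the harder the indicators are to know and to detect, but also the more pain you cause the attacker by detecting them, because they are harder for the attacker to change.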
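As a rough illustration, the following Python sketch sorts the indicators of a feed by their level in the Pyramid of Pain (introduced by David J. Bianco). It recognizes only hashes, IP addresses, domain names, and MITRE ATT&CK technique IDs, using simple regular expressions that are assumptions, not a complete parser. Network/host artifacts and tools need analyst context and are returned as `None`, and a bare file name like `evil.exe` would be misread as a domain.

```python
import ipaddress
import re
from enum import IntEnum
from typing import Optional


class PainLevel(IntEnum):
    """Pyramid of Pain levels, from bottom (1) to top (6)."""
    HASH_VALUES = 1
    IP_ADDRESSES = 2
    DOMAIN_NAMES = 3
    NETWORK_HOST_ARTIFACTS = 4
    TOOLS = 5
    TTPS = 6


HASH_RE = re.compile(r"[0-9a-fA-F]{32}|[0-9a-fA-F]{40}|[0-9a-fA-F]{64}")  # MD5/SHA-1/SHA-256
TTP_RE = re.compile(r"T\d{4}(\.\d{3})?")  # ATT&CK technique ID, e.g. T1566.003
DOMAIN_RE = re.compile(r"(?=.{1,253}$)([A-Za-z0-9-]{1,63}\.)+[A-Za-z]{2,63}")


def classify(indicator: str) -> Optional[PainLevel]:
    """Map one indicator string to a pyramid level, or None if not recognized."""
    value = indicator.strip()
    if HASH_RE.fullmatch(value):
        return PainLevel.HASH_VALUES
    try:
        ipaddress.ip_address(value)  # accepts IPv4 and IPv6
        return PainLevel.IP_ADDRESSES
    except ValueError:
        pass
    if TTP_RE.fullmatch(value):
        return PainLevel.TTPS
    if DOMAIN_RE.fullmatch(value):
        return PainLevel.DOMAIN_NAMES
    return None


if __name__ == "__main__":
    feed = ["203.0.113.10", "T1566.003", "d41d8cd98f00b204e9800998ecf8427e", "c2.example.net"]
    # Highest-value indicators first; unrecognized ones (None) go last.
    for ioc in sorted(feed, key=lambda i: classify(i) or 0, reverse=True):
        print(classify(ioc), ioc)
```

Sorting by level is one way to decide where analyst time goes first: detections built on the upper levels stay useful longer.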

Ok, here is another "war story": We once received a warning from the Bundesamt für Verfassungsschutz (BfV, https://www.verfassungsschutz.de/en/index-en.html , the domestic intelligence service of the Federal Republic of Germany) about a North Korean nation-state hacker group that was very active in Germany at the time. The report was forwarded by the Bundesamt für Sicherheit in der Informationstechnik (BSI, https://www.bsi.bund.de/EN/TheBSI/thebsi_node.html , Federal Office for Information Security), which, at least so I assumed, meant the included information had received an extra quality check. The report contained IP addresses used by the malware as IoCs, and now it gets interesting. A subsidiary put the IPs into their SIEM and was shocked when a lot of traffic to the C&C servers instantly became visible. It was a bit like switching on the light in a hospital kitchen and watching every cockroach run away to hide. They had to inform upper management because it looked like a massive APT compromise. This led to high attention from above, extra pressure, long working hours, regular reporting to management, and so on.

What my team did was clever: our analysts checked the IPs against an external source and realized that most of them belonged to Microsoft's software update Content Delivery Networks (CDNs). After removing these known-good addresses, we saw much less traffic than our subsidiary (just a few matches), and those matches turned out to be false positives during the analysis process.

Unfortunately, the subsidiary only told us about the many alarms they were seeing after some days, and it took another day to realize that they hadn't done the quality check of the IoCs: lesson learned for both teams. End of story.
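The quality check from this story can be sketched in a few lines of Python with the standard `ipaddress` module: before IoC IPs go into the SIEM, split off every address that falls into a known-good range. The allowlist below uses documentation prefixes as placeholders. In practice it would be fed from trusted sources, such as the IP ranges that the operators of your software update CDNs publish.

```python
import ipaddress
from typing import Iterable, List, Sequence, Tuple, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Placeholder allowlist: documentation prefixes stand in for real known-good ranges.
KNOWN_GOOD: List[Network] = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8:1::/48"),
]


def split_iocs(
    ioc_ips: Iterable[str], known_good: Sequence[Network]
) -> Tuple[List[str], List[str]]:
    """Separate IoC IPs into (still suspicious, known good) before loading the SIEM."""
    suspicious: List[str] = []
    benign: List[str] = []
    for raw in ioc_ips:
        ip = ipaddress.ip_address(raw.strip())
        # "in" is simply False when IPv4 and IPv6 are mixed
        target = benign if any(ip in net for net in known_good) else suspicious
        target.append(str(ip))
    return suspicious, benign


if __name__ == "__main__":
    feed = ["192.0.2.15", "203.0.113.7", "198.51.100.200", "2001:db8:2::5"]
    suspicious, benign = split_iocs(feed, KNOWN_GOOD)
    print("load into SIEM:", suspicious)
    print("review and drop:", benign)
```

Keeping the dropped addresses in a separate list, instead of silently discarding them, also gives you something to send back to the source of the report.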

OODA Loop

The OODA loop comes from the military field and has since found its way into various other domains. It serves as an orientation and decision-making aid for unknown events, like a fighter pilot who suddenly encounters an adversary aircraft.

OODA stands for:

- Observe

- Orient

- Decide

- Act

In information security (info-sec), the model is often simplified and is intended to help security analysts orient themselves and make decisions, even when they are confronted with something supposedly new.
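The simplified info-sec variant can be pictured as a loop over alerts. In the following illustrative Python sketch, "orient" means putting an observation into the context of what is already known, and the result of each action feeds back into that context for the next iteration. The class, the decision rules, and the `confirmed_malicious` field (a stand-in for a real investigation result) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class OodaAnalyst:
    """Simplified OODA loop for alert handling (illustrative only)."""
    known_bad: Set[str] = field(default_factory=set)  # orientation: current knowledge
    actions: List[str] = field(default_factory=list)

    def observe(self, alert: Dict[str, object]) -> Dict[str, object]:
        # In practice: SIEM alert, EDR telemetry, proxy logs, ...
        return alert

    def orient(self, observation: Dict[str, object]) -> str:
        # Put the observation into context using what we already know.
        return "known-bad" if observation["dst"] in self.known_bad else "unknown"

    def decide(self, assessment: str) -> str:
        return "block" if assessment == "known-bad" else "investigate"

    def act(self, observation: Dict[str, object], decision: str) -> None:
        self.actions.append(f"{decision} {observation['dst']}")
        # Feedback: the (simulated) investigation result updates the orientation.
        if decision == "investigate" and observation.get("confirmed_malicious"):
            self.known_bad.add(str(observation["dst"]))

    def run(self, alerts: List[Dict[str, object]]) -> None:
        for alert in alerts:
            observation = self.observe(alert)
            self.act(observation, self.decide(self.orient(observation)))


if __name__ == "__main__":
    analyst = OodaAnalyst()
    analyst.run([
        {"dst": "203.0.113.10", "confirmed_malicious": True},
        {"dst": "203.0.113.10"},  # second contact: now oriented as known-bad
    ])
    print(analyst.actions)
```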

The core idea of the model's creator, US Air Force Colonel John Boyd, is often ignored in the info-sec community: to predict the opponent's behavior and to mislead or control the opponent through ambiguous or changing situations, thereby diverting the opponent's resources and focus.

To protect a company, you can certainly think about how the company is perceived from the outside and develop preventive measures so that it does not become an attractive target for a potential attacker (at the same time, you can create various attacker "personas" for your risk analysis process). However, this approach is in stark contrast to the fundamentals of economics: if a company does not advertise its capabilities, it is difficult to acquire new customers. Keeping a low profile is certainly conceivable for the military, public authorities, or companies that do not compete in the free market. But even they must promote themselves to some degree in order to be attractive, for example to new employees, or to show the organisation's status in the hierarchy of its system.

A better way would be to selectively omit or falsify information. For example, an energy company may state how many customers it serves, how many coal-fired power plants it operates, and that it is part of Germany's critical infrastructure. On other pages you might find references to manufacturers, or news about redundancies or outsourcing in IT or power plant protection. It could be better to present only the most necessary facts objectively and, for emotional advertising, to inform about social projects and the support of local innovation fairs, start-ups, or universities. The principle can be taken even further by using representative office buildings for events, customer traffic, meetings with manufacturers, etc., while hiding the actual crown jewels in inconspicuous, functional buildings within the city or in industrial areas, where the owner is recognizable only by a small sign at the main entrance, if at all. In addition, deterrence in the form of surveillance cameras, high fences, and guards patrolling with dogs at night can give the building a "nah, not that cool" image. Against professional spies and criminals, of course, you need further measures inside.

Let's leave the world of James Bond and the Cold War era and come back to cyber defense.

Studying attacker behavior with the help of the MITRE ATT&CK framework, the Unified Kill Chain, real-world case reports from security companies, and so on helps you understand attackers and create attacker "personas".
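One way to put such personas to work during an incident is to describe each one as a set of MITRE ATT&CK technique IDs and compare them with the techniques actually observed. The Python sketch below ranks personas by Jaccard similarity (shared techniques divided by all techniques of both sets). The personas themselves are hypothetical, and a high score is a hint for the analysis, not an attribution.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical attacker personas described by MITRE ATT&CK technique IDs.
PERSONAS: Dict[str, Set[str]] = {
    "espionage group (recruiter lures)": {"T1566.003", "T1204", "T1071"},
    "ransomware affiliate": {"T1190", "T1078", "T1486"},
}


def rank_personas(
    observed: Set[str], personas: Dict[str, Set[str]]
) -> List[Tuple[str, float]]:
    """Rank personas by Jaccard similarity between observed and known techniques."""
    ranking = []
    for name, techniques in personas.items():
        union = observed | techniques
        score = len(observed & techniques) / len(union) if union else 0.0
        ranking.append((name, score))
    return sorted(ranking, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Spearphishing via Service and Application Layer Protocol C2 seen in an incident
    for name, score in rank_personas({"T1566.003", "T1071"}, PERSONAS):
        print(f"{score:.2f}  {name}")
```

The techniques of the best-matching persona that were not observed yet are good candidates for the next threat hunting queries.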

This information can also be used for cyber deception: honeypots, honey tokens, manipulated documents, and so on. This strategy deserves a book of its own, and I can recommend "Cyber Denial, Deception and Counter Deception - A Framework for Supporting Active Cyber Defense".
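As a small taste of deception, a honey token is a fake secret that no legitimate process ever uses, so any appearance in the logs is a high-quality alarm. The Python sketch below creates unique fake account names with the standard `secrets` module, remembers where each one was planted, and scans log lines for them. The `svc_backup_` naming scheme, the planting location, and the log format are hypothetical.

```python
import secrets
from typing import Dict, Iterable, List


def make_honeytoken(location: str, registry: Dict[str, str]) -> str:
    """Create a unique fake account name and remember where it was planted."""
    token = "svc_backup_" + secrets.token_hex(8)  # hypothetical naming scheme
    registry[token] = location
    return token


def scan_logs(lines: Iterable[str], registry: Dict[str, str]) -> List[str]:
    """Any appearance of a honey token is suspicious: nobody should ever use it."""
    alerts = []
    for line in lines:
        for token, location in registry.items():
            if token in line:
                alerts.append(f"honeytoken from '{location}' seen: {line.strip()}")
    return alerts


if __name__ == "__main__":
    planted: Dict[str, str] = {}
    fake_user = make_honeytoken("credentials.xlsx on the file share", planted)
    logs = ["login ok user=alice", f"login failed user={fake_user}"]
    for alert in scan_logs(logs, planted):
        print(alert)
```

Because each token is unique per location, an alarm also tells you which document or system the attacker has already seen.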

Whether the OODA loop helps with containment and the acute defense against attacks is something I cannot answer, and it sounds too theoretical to be useful during an incident. But from experience I can say that information about the attacker's TTPs and intention is one of the most burning questions during an incident.

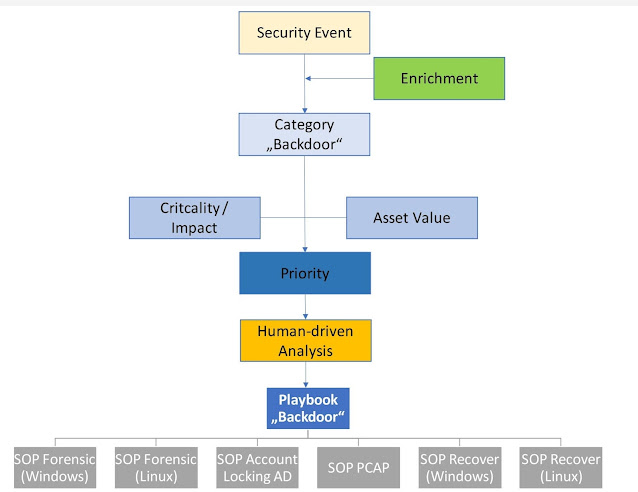

Playbooks and Standard Operating Procedures

A Playbook should contain all best practices and knowledge that help the CDC analyst perform the triage (initial incident analysis) as well as a deeper analysis. It combines all the knowledge gained in advance from the studies, databases, models, threat intelligence, etc. I mentioned before.

For the details and the concrete actions, Standard Operating Procedures (SOPs) are used. An SOP gives a detailed, technical description of how the attacker can be stopped and/or how additional important information can be gathered. These steps are often carried out by the department responsible for running the affected systems, in cooperation with the CDC analyst. To make this cooperation smooth, the SOP should also define which information the technician needs from the analyst, which tools are used for communication, and maybe also telephone numbers for on-call duty... and, not to forget, regular joint training.

Playbooks and SOPs should be verified at least once a year in a table-top exercise with all involved parties. This ensures that the instructions are up to date and that people stay trained. Record who took part in which training and when, and also define a Key Performance Indicator (KPI) for trainings so you can steer the continuous improvement of the most important activity: the acute defense!
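What such a training KPI could look like is shown in this minimal Python sketch: the share of Playbooks exercised within the last 365 days, plus the list of overdue ones. The KPI definition, the one-year window, and the sample Playbook names and dates are assumptions; choose whatever metric fits your governance.

```python
from datetime import date, timedelta
from typing import Dict, List, Optional


def overdue_playbooks(
    last_exercise: Dict[str, Optional[date]], today: date, max_age_days: int = 365
) -> List[str]:
    """Playbooks not exercised within max_age_days (None = never exercised)."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(name for name, d in last_exercise.items() if d is None or d < cutoff)


def training_coverage(
    last_exercise: Dict[str, Optional[date]], today: date, max_age_days: int = 365
) -> float:
    """KPI: percentage of Playbooks exercised within max_age_days."""
    if not last_exercise:
        return 0.0
    overdue = overdue_playbooks(last_exercise, today, max_age_days)
    return 100.0 * (len(last_exercise) - len(overdue)) / len(last_exercise)


if __name__ == "__main__":
    exercises = {  # hypothetical table-top records
        "Phishing": date(2024, 3, 10),
        "Ransomware": date(2022, 11, 2),
        "DDoS": None,
        "Malware C2": date(2023, 9, 15),
    }
    today = date(2024, 6, 1)
    print(f"coverage: {training_coverage(exercises, today):.0f} %")
    print("overdue:", overdue_playbooks(exercises, today))
```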

All Playbooks should have the same format, with chapters based on the incident response process in use. Static, generic content is just information overhead and should be omitted.

A short header should list:

- category / type of Incident

- criticality / impact

- step/phase in UKC

- typical actors

- typical additional TTPs
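To keep all Playbooks in the same format, the header fields listed above and the required chapters can be checked automatically. In this Python sketch, the chapter names assume the NIST SP 800-61 Rev. 2 phases, and the criticality scale and sample Playbook are hypothetical; adapt both to the process and scales you actually use.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Assumption: chapters follow the NIST SP 800-61 Rev. 2 phases.
REQUIRED_CHAPTERS = [
    "Preparation",
    "Detection & Analysis",
    "Containment, Eradication & Recovery",
    "Post-Incident Activity",
]
CRITICALITIES = {"low", "medium", "high", "critical"}  # hypothetical scale


@dataclass
class PlaybookHeader:
    category: str      # category / type of incident
    criticality: str   # criticality / impact
    ukc_phase: str     # step/phase in the Unified Kill Chain
    typical_actors: List[str] = field(default_factory=list)
    typical_ttps: List[str] = field(default_factory=list)  # e.g. ATT&CK IDs


@dataclass
class Playbook:
    header: PlaybookHeader
    chapters: Dict[str, str]  # chapter title -> content

    def problems(self) -> List[str]:
        """List format violations so every Playbook keeps the same structure."""
        issues = []
        if self.header.criticality not in CRITICALITIES:
            issues.append(f"unknown criticality: {self.header.criticality}")
        for field_name in ("category", "ukc_phase"):
            if not getattr(self.header, field_name).strip():
                issues.append(f"empty header field: {field_name}")
        for chapter in REQUIRED_CHAPTERS:
            if not self.chapters.get(chapter, "").strip():
                issues.append(f"missing chapter: {chapter}")
        return issues


if __name__ == "__main__":
    draft = Playbook(
        PlaybookHeader("Malware / C2", "high", "Command & Control", ["APT"], ["T1071"]),
        {"Preparation": "EDR deployed", "Detection & Analysis": "Check proxy logs"},
    )
    print(draft.problems())
```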

Ideally, the Playbook is implemented as a digital workflow in an incident response tool that creates a new task for the CDC analyst when triggered by a SIEM alarm (or another reporting source), is connected to an asset database (CMDB) to look up additional internal information, and is (or is connected to) a ticketing system to create response task tickets for other units.
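The core of such a workflow fits in a few lines. In this Python sketch, plain dictionaries stand in for the CMDB and the Playbook definitions, and lists stand in for the analyst queue and the ticketing system; a real incident response tool would call their APIs instead. All rule names, hosts, teams, and steps are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical stand-ins for the CMDB and the Playbook definitions.
CMDB: Dict[str, Dict[str, str]] = {
    "WS-042": {"owner_team": "Client Services", "criticality": "medium"},
}
# Playbook steps as (action, responsible unit); "OWNER" = team that runs the asset.
PLAYBOOKS: Dict[str, List[Tuple[str, str]]] = {
    "malware-c2": [
        ("Isolate host from network", "OWNER"),
        ("Create memory image", "CDC Forensics"),
        ("Reset user credentials", "IAM Team"),
    ],
}
UNKNOWN_ASSET = {"owner_team": "unknown", "criticality": "unknown"}


@dataclass
class IncidentWorkflow:
    analyst_tasks: List[Dict[str, str]] = field(default_factory=list)
    tickets: List[Dict[str, str]] = field(default_factory=list)

    def on_siem_alarm(self, rule: str, host: str) -> Dict[str, str]:
        """Start the digital Playbook for one SIEM alarm."""
        asset = CMDB.get(host, UNKNOWN_ASSET)        # enrich with CMDB data
        task = {"playbook": rule, "host": host, **asset}
        self.analyst_tasks.append(task)              # triage task for the CDC analyst
        for action, unit in PLAYBOOKS.get(rule, []):  # response tickets for other units
            assignee = asset["owner_team"] if unit == "OWNER" else unit
            self.tickets.append({"host": host, "action": action, "assignee": assignee})
        return task


if __name__ == "__main__":
    workflow = IncidentWorkflow()
    workflow.on_siem_alarm("malware-c2", "WS-042")
    for ticket in workflow.tickets:
        print(ticket)
```

Resolving the responsible unit from the CMDB at runtime keeps the Playbook itself generic, so the same definition works for every affected host.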

I promised you a "war story", so here we go. When my former employer decided to build up a Security Operations Center, they hired various consultants: some of them were dedicated to writing Playbooks and some to creating the underlying detection rules. There were two problems with this. First, in the end no one could say why exactly this rule set had been implemented. We fixed this by mapping the existing rules to MITRE ATT&CK and later built every new rule based on it. Second, all Playbooks were written very individually, based on the knowledge and skills of the respective consultant. They all carried a big overhead of basic security yada yada yada copied from one document to another, hiding the real information, and they lacked a common structure. We started the SOC with these documents and had to spend the first month after the project rewriting them all. This caused a lot of frustration because the analysts wanted to analyze, not write documents. In the end we turned the Playbook documents into digital workflows, which was slick and intelligent: whenever the SIEM reported an alarm, the corresponding digital Playbook workflow was started.
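The mapping exercise from this story can be supported with a small script. Given each detection rule with its MITRE ATT&CK technique IDs and a set of techniques you consider relevant (for example, taken from your attacker personas), the Python sketch below lists rules without any mapping and relevant techniques that no rule covers. The rule names and the required technique set are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def coverage_report(
    rule_map: Dict[str, List[str]], required: Set[str]
) -> Tuple[List[str], List[str]]:
    """Return (rules without an ATT&CK mapping, required techniques without a rule)."""
    unmapped_rules = sorted(rule for rule, techniques in rule_map.items() if not techniques)
    covered = set().union(*rule_map.values())
    gaps = sorted(required - covered)
    return unmapped_rules, gaps


if __name__ == "__main__":
    rules = {  # hypothetical SIEM rule names
        "Office app spawns shell": ["T1204", "T1059"],
        "Beaconing to rare domain": ["T1071"],
        "Legacy rule 17": [],  # nobody knows why it exists
    }
    unmapped, gaps = coverage_report(rules, {"T1566.003", "T1204", "T1071", "T1486"})
    print(unmapped)  # ['Legacy rule 17']
    print(gaps)      # ['T1486', 'T1566.003']
```

Unmapped rules are candidates for review or retirement, while the gaps tell you which new rules to build next.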

And that's it for the CDC basics. Next we will take a look at the regulatory requirements for the finance sector in Germany/Europe and how they affect a CDC and beyond.

Best,

Thomas